Content moderation has become one of the most critical aspects of managing online communities.

In a world where user-generated content is the foundation of many platforms, ensuring that the interactions remain respectful, safe, and within guidelines is essential. Whether you are running a social media group, a gaming server, a forum, or even a dedicated platform for professional discussions, moderation is key to fostering an environment where users feel secure and valued.

In this comprehensive guide, we will explore:

Why content moderation is essential for the health of online communities.

The different types of content that require moderation.

Common challenges faced by community managers when moderating content.

How AI-driven solutions, like Watchdog, can transform moderation practices.

Best practices for effective community management.

Let’s dive into each aspect and understand how content moderation, particularly through tools like Watchdog, can help safeguard and scale your online community.

The Role of Content Moderation in Community Health

Content moderation ensures that online platforms remain safe, engaging, and aligned with community values. Without proper moderation, harmful behaviors such as harassment, misinformation, hate speech, and spam can thrive. This not only harms the individual user experience but also erodes the overall trust in the platform.

Why Is Content Moderation Important?

Protecting Users from Harmful Content: Harmful content spreads quickly, and online communities are exposed to it every day. This includes abusive language, bullying, harassment, explicit material, and violent imagery. Protecting users, especially minors, from exposure to such content is a priority for any community leader.

Maintaining the Community’s Integrity: A community thrives when its members share a common understanding of acceptable behavior. Content moderation helps uphold this integrity by ensuring that users who violate the rules are flagged, warned, or removed. It sends a clear message that disruptive behavior will not be tolerated.

Legal and Ethical Responsibilities: With regulations such as the EU's GDPR and evolving legal frameworks around user privacy and online behavior, platforms must comply with the laws that apply to them and avoid the risks of hosting illegal content. Effective moderation also demonstrates a commitment to ethical standards.

Enhancing User Experience and Engagement: When users feel that the community is well-moderated, they are more likely to participate and contribute. A platform that is filled with spam or offensive content will drive away genuine participants. By keeping discussions civil and on-topic, content moderation increases overall user engagement and satisfaction.

Types of Content That Require Moderation

Moderating content isn’t just about filtering out offensive language. A wide variety of content requires scrutiny to ensure that it aligns with community guidelines. Let’s explore some key content types that need moderation.

1. Text-Based Content

The most common form of user-generated content is text. Whether it’s comments, posts, or messages, text-based contributions need to be regularly monitored to ensure they do not contain:

Harassment and hate speech: Harmful language directed toward specific individuals or groups.

Spam: Repetitive, irrelevant content that clutters discussions and reduces the quality of engagement.

Misinformation: False or misleading information, particularly in sensitive topics like health, politics, and public safety.
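As a rough illustration of the "repetitive" part of spam, here is a minimal Python sketch that flags text posted more often than a chosen limit. The function names and the `max_repeats` threshold are assumptions made for this example. It does nothing for harassment or misinformation, which need far more than string matching.

```python
import re
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical posts compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def find_repeated_posts(posts: list[str], max_repeats: int = 2) -> set[str]:
    """Return normalized texts that appear more than `max_repeats` times."""
    counts = Counter(normalize(p) for p in posts)
    return {text for text, n in counts.items() if n > max_repeats}


posts = ["Buy now!", "buy   NOW!", "Buy now! ", "hello"]
print(find_repeated_posts(posts))
```

A real filter would also look at posting rate and account age, since determined spammers vary their wording.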

2. Images and Videos

With the rise of visual content across social platforms, images and videos have become integral parts of online communities. However, visual content can also pose significant challenges:

Explicit and inappropriate material: Content containing nudity, violence, or other forms of explicit material that may violate community standards.

Misleading imagery: Edited or doctored images can spread misinformation or deceive users about important topics.

Unauthorized use of copyrighted content: Using images or videos without permission can result in legal consequences for both the user and the platform.

3. Links and Embedded Media

Users often share links to external content, which can lead to the spread of harmful, misleading, or inappropriate content outside the direct control of the platform. Moderating links and embedded media ensures that:

Users do not share links to dangerous websites (e.g., phishing sites).

Embedded media aligns with the platform’s content policies.

The community is protected from external sources of misinformation.
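One simple way to approach the first point is to compare each shared link's host against a blocklist. The sketch below assumes a hand-maintained set of blocked domains (the `.example` names are placeholders). Production systems usually rely on continuously updated threat feeds rather than a static list.

```python
from urllib.parse import urlparse

# Hypothetical blocklist for illustration only.
BLOCKED_DOMAINS = {"phishing.example", "malware.example"}


def is_blocked_link(url: str, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in blocked)


print(is_blocked_link("https://login.phishing.example/reset"))
print(is_blocked_link("https://example.org/page"))
```

Matching on the parsed hostname rather than searching the raw string avoids false hits on look-alike names such as `notphishing.example`.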

4. User Behavior and Interactions

Moderation extends beyond the content itself. The way users interact with each other—through likes, dislikes, upvotes, and reports—can indicate trends or patterns of toxic behavior. Ensuring fair and healthy interactions is key to fostering an inclusive community.
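To make the idea of spotting patterns concrete, the following sketch counts how many distinct members have reported each user and lists those who cross a threshold. The `(reporter_id, reported_id)` data shape and the `min_reports` value are assumptions for illustration. A surfaced account is a candidate for human review, not proof of wrongdoing.

```python
from collections import Counter


def users_to_review(reports, min_reports: int = 3) -> list[str]:
    """reports: iterable of (reporter_id, reported_id) pairs."""
    # Deduplicate so one person filing many reports cannot trigger a review alone.
    distinct = {(reporter, reported) for reporter, reported in reports}
    counts = Counter(reported for _, reported in distinct)
    return sorted(user for user, n in counts.items() if n >= min_reports)


reports = [("a", "x"), ("b", "x"), ("c", "x"), ("a", "y"), ("a", "y"), ("a", "y")]
print(users_to_review(reports))
```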

Challenges of Manual Content Moderation

For many online platforms, moderation has traditionally been handled by human moderators. However, manual moderation is fraught with challenges, especially as communities grow in size.

1. Time-Consuming

Manually reviewing posts, comments, images, and videos is highly labor-intensive. As the volume of content increases, the workload becomes overwhelming for moderators. This can lead to delays in reviewing and acting on harmful content, putting users at risk.

2. Inconsistent Application of Rules

Humans are subjective, and different moderators may interpret the same guidelines in varying ways. This inconsistency can frustrate users who expect a fair and transparent application of rules across the board.

3. Moderator Burnout

Constantly reviewing negative content takes a toll on moderators. Exposure to disturbing or harmful material can lead to emotional exhaustion and burnout, which affects their ability to make sound decisions.

4. Scale Limitations

A rapidly growing community may outpace the capabilities of even a large moderation team. With hundreds or thousands of posts made every minute, manual moderation simply cannot keep up.

The Rise of AI-Powered Content Moderation

Given the limitations of manual moderation, many online communities are turning to AI-powered solutions to automate the process and scale efficiently.

How Does AI-Based Content Moderation Work?

AI-powered moderation tools use machine learning models to scan user-generated content in real time. These models are trained on labeled examples to detect patterns in text, images, and videos that indicate rule violations or inappropriate behavior.
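To show what "trained to detect patterns" means in the simplest possible terms, here is a toy naive Bayes text classifier in plain Python. It is a teaching sketch only: the four training examples are invented, and commercial tools, Watchdog included, use much larger models whose internals are not described in this article.

```python
import math
from collections import Counter

LABELS = ("ok", "violation")


def train(examples):
    """examples: list of (text, label) pairs; both labels must appear at least once."""
    word_counts = {label: Counter() for label in LABELS}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["ok"]) | set(word_counts["violation"])
    return word_counts, label_counts, vocab


def violation_probability(model, text: str) -> float:
    """Estimate the probability that `text` is a violation."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    log_scores = {}
    for label in LABELS:
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                # Add-one smoothing keeps unseen word/label pairs above zero.
                score += math.log((word_counts[label][word] + 1) / denom)
        log_scores[label] = score
    # Normalize the two log scores into a probability.
    top = max(log_scores.values())
    weights = {k: math.exp(v - top) for k, v in log_scores.items()}
    return weights["violation"] / sum(weights.values())


model = train([
    ("you are an idiot", "violation"),
    ("idiot go away", "violation"),
    ("thanks for the help", "ok"),
    ("great post thanks", "ok"),
])
print(violation_probability(model, "what an idiot"))
print(violation_probability(model, "thanks a lot"))
```

The first message scores well above 0.5 and the second well below, because the model has only learned which words appeared with which label. That is also why such models need large, regularly refreshed training sets.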

Key Advantages of AI in Content Moderation

Speed and Efficiency: AI can analyze large volumes of content within moments of posting, flagging or removing inappropriate posts in real time. This shortens the window in which harmful content is visible and can damage the community.

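Consistency: AI applies the same rules to every user, which reduces the moderator-to-moderator variation that comes with human review. Models can still inherit bias from their training data, so periodic audits are needed, but a single rule set applied uniformly makes the process fairer and easier to explain.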

Scalability: As a community grows, AI scales with it. Handling more content is largely a matter of adding computing capacity rather than hiring and training proportionally more moderators.

Focus on Edge Cases: AI can handle the bulk of content moderation, allowing human moderators to focus on edge cases and more complex situations that require nuanced judgment.
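The split between automated handling and human judgment is often implemented as score thresholds. The sketch below assumes a model that returns a violation score between 0 and 1. The two cut-off values are hypothetical and would need tuning against a community's own data.

```python
def route(score: float, remove_at: float = 0.95, review_at: float = 0.60) -> str:
    """Decide what happens to a post given a model's violation score in [0, 1]."""
    if score >= remove_at:
        return "remove"        # high confidence: act automatically
    if score >= review_at:
        return "human_review"  # uncertain: send to a moderator queue
    return "allow"


for score in (0.99, 0.70, 0.10):
    print(score, route(score))
```

Lowering `review_at` sends more borderline content to people, which catches more violations at the cost of a longer review queue.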

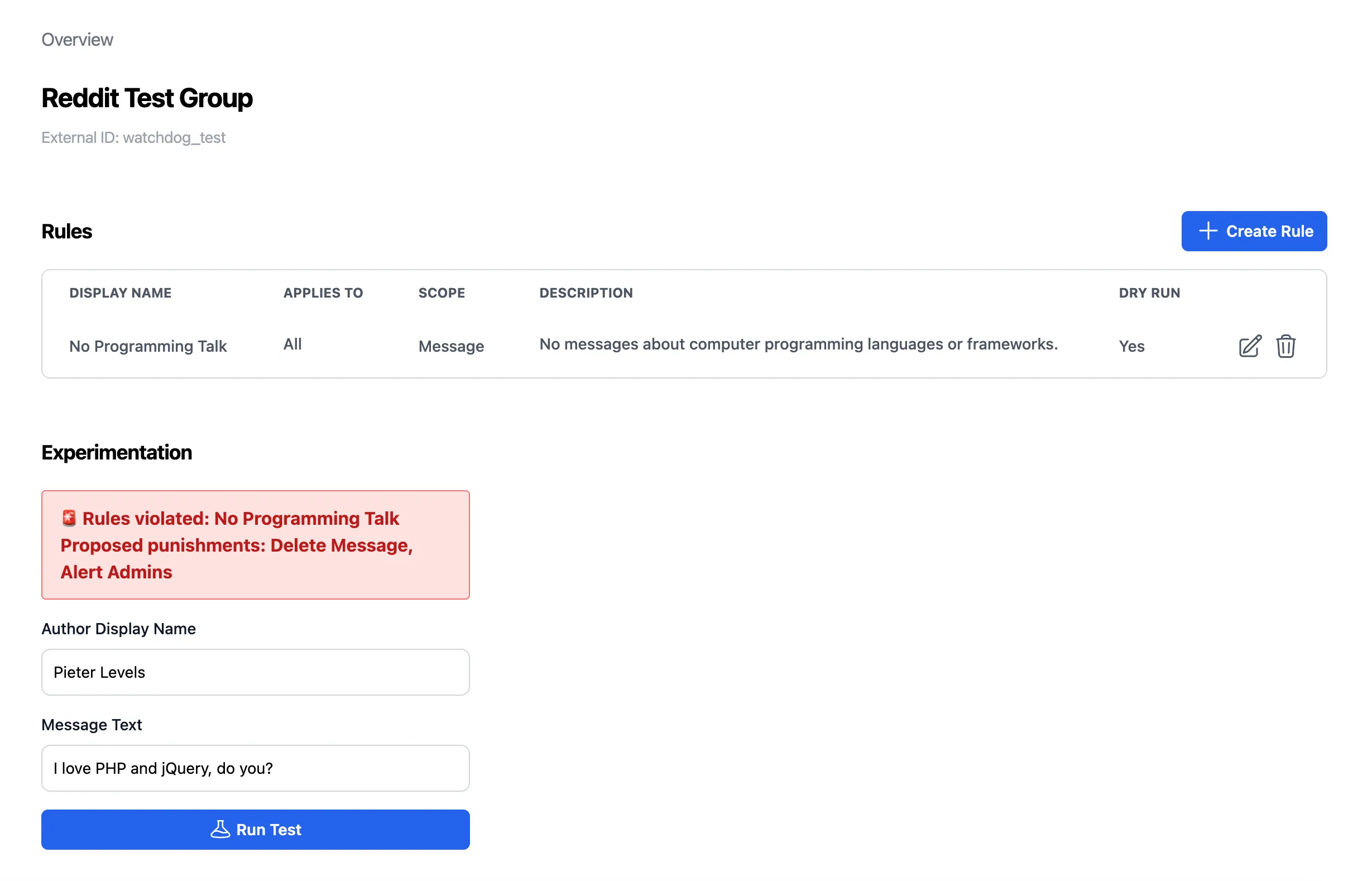

Watchdog: Revolutionizing Content Moderation for Communities

As an AI-powered moderation tool, Watchdog provides community managers with the ability to monitor and moderate their platforms more effectively and efficiently.

What Makes Watchdog Different?

Customizable Moderation Rules: Every community is unique, and Watchdog understands this. With customizable rules, community managers can tailor Watchdog to flag specific types of content that violate their guidelines.

Real-Time Moderation: Watchdog works around the clock to monitor content in real time, identifying and addressing rule violations as they happen.

Multi-Content Support: Watchdog can analyze text, images, videos, and links, providing comprehensive coverage for all forms of content that your community may produce.

Actionable Insights: Watchdog not only moderates but also provides analytics and insights to help you understand the behavior of your community. This data can help managers refine their community guidelines and improve user engagement.

Scalable Solution: Whether your community has a few hundred users or millions, Watchdog scales to meet your needs, growing with your platform as it expands.
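To give a feel for what customizable rules can look like in general, here is a small generic sketch in Python. It is not Watchdog's configuration format, which this article does not document. The `Rule` structure, the rule names, and the patterns are all invented for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    pattern: str  # regular expression, matched case-insensitively
    action: str   # what to do on a match, e.g. "flag" or "remove"


def apply_rules(text: str, rules: list[Rule]) -> list[tuple[str, str]]:
    """Return (rule name, action) for every rule whose pattern matches the text."""
    return [
        (rule.name, rule.action)
        for rule in rules
        if re.search(rule.pattern, text, re.IGNORECASE)
    ]


rules = [
    Rule("invite-links", r"discord\.gg/\w+", "remove"),
    Rule("giveaway", r"\bfree\s+giveaway\b", "flag"),
]
print(apply_rules("Join discord.gg/abc123 for a FREE giveaway", rules))
```

Keeping rules as data rather than code is what lets each community adjust them without touching the moderation engine itself.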

Use Cases for Watchdog

Gaming Communities: Watchdog helps gaming platforms maintain a fun and safe environment by moderating in-game chat, forums, and user interactions, ensuring that toxicity and hate speech are minimized.

Social Networks: On platforms where users interact frequently through posts and comments, Watchdog ensures that discussions remain respectful, informative, and free of spam or misinformation.

Professional Forums: In professional and educational communities, Watchdog ensures that the platform remains focused on constructive and relevant conversations by filtering out off-topic and inappropriate content.

Best Practices for Effective Content Moderation

Even with powerful AI tools like Watchdog, there are several best practices that community managers should follow to ensure that their moderation efforts are as effective as possible.

1. Clearly Defined Guidelines

Ensure that your community guidelines are clear, concise, and accessible. Users should know exactly what is and isn’t allowed, which helps prevent rule violations before they occur.

2. Transparent Enforcement

Be transparent about how rules are enforced and how users can appeal moderation decisions. Trust is built when users feel that the system is fair and accountable.

3. Continuous Training of AI Models

AI models should be continuously updated and retrained to recognize new forms of harmful content, especially as online behavior evolves. Watchdog stays up to date with these trends, but regular input from community managers helps ensure maximum effectiveness.

4. Feedback from Human Moderators

While AI can handle the bulk of moderation, human oversight is still necessary. Feedback from human moderators can improve the AI’s accuracy and help refine the moderation process.
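One way to turn moderator feedback into a number is to track how often moderators uphold the AI's flags. The sketch below assumes each reviewed item is recorded as a pair of booleans, a format chosen for this example only.

```python
def flag_precision(decisions):
    """decisions: list of (ai_flagged, moderator_upheld) boolean pairs.

    Returns the share of AI flags that moderators upheld, or None if nothing was flagged.
    """
    upheld = [was_upheld for ai_flagged, was_upheld in decisions if ai_flagged]
    if not upheld:
        return None
    return sum(upheld) / len(upheld)


decisions = [(True, True), (True, False), (True, True), (False, False)]
print(flag_precision(decisions))
```

A falling value over time suggests the model is flagging too aggressively or that community norms have shifted, both of which are signals to retrain or adjust thresholds.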

Conclusion

Content moderation is no longer a luxury; it’s a necessity for online communities to thrive. As platforms grow, the challenges of manual moderation become evident, making automated solutions like Watchdog indispensable. By leveraging AI-driven moderation, community managers can ensure a safer, more respectful environment for users while scaling their efforts efficiently.

With real-time moderation, customizable rules, and scalable support for various types of content, Watchdog is the ideal partner for any community looking to enhance its content moderation efforts.

👉 Are you ready to take your community moderation to the next level? Try Watchdog today and discover how AI can transform the way you manage your online platform.